
Here are some findings about improving the reconstruction quality.

Bias terms in the geometry MLP matter

In my original implementation, I omitted the bias terms in the geometry MLP for simplicity, since they are initialized to 0. However, I found that these bias terms are important for producing high-quality surfaces, especially in detailed regions. A possible reason is that the "shifting" brought by these biases acts as a form of normalization, making high-frequency signals easier to learn. Thanks to @TerryRyu for mentioning this problem in #22. Fixed in the latest commits.
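Why can't a zero-initialized bias simply be dropped? The pure-Python sketch below uses a hypothetical `Linear` helper (not the repository's geometry MLP) to show that at initialization a layer with zero biases produces exactly the same output as a bias-free one. The bias still receives the gradient dL/db = dL/dy, however, so it moves away from zero after the first update and the two layers stop being equivalent.

```python
import random


class Linear:
    """Hypothetical dense layer y = W x + b, for illustration only."""

    def __init__(self, n_in, n_out, bias=True, seed=0):
        rng = random.Random(seed)
        # Same seed -> identical weights for the bias and bias-free variants.
        self.W = [[rng.gauss(0.0, n_in ** -0.5) for _ in range(n_in)]
                  for _ in range(n_out)]
        # Biases start at zero, as in the original implementation.
        self.b = [0.0] * n_out if bias else None

    def forward(self, x):
        y = [sum(w * xi for w, xi in zip(row, x)) for row in self.W]
        if self.b is not None:
            y = [yi + bi for yi, bi in zip(y, self.b)]
        return y

    def sgd_step_bias(self, grad_y, lr):
        # dL/db equals dL/dy, so any nonzero output gradient updates the bias.
        if self.b is not None:
            self.b = [bi - lr * g for bi, g in zip(self.b, grad_y)]


x = [0.3, -0.2, 0.5]
with_bias = Linear(3, 2, bias=True)
no_bias = Linear(3, 2, bias=False)
print(with_bias.forward(x) == no_bias.forward(x))  # True at initialization

with_bias.sgd_step_bias(grad_y=[1.0, -1.0], lr=0.1)
no_bias.sgd_step_bias(grad_y=[1.0, -1.0], lr=0.1)  # no-op: nothing to update
print(with_bias.b)                                  # [-0.1, 0.1]
print(with_bias.forward(x) == no_bias.forward(x))  # False after one step
```

"Initialized to 0" only describes the starting point, not the function the trained network can represent.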

MSE vs. L1

Although the original NeuS paper adopts L1 as the photometric loss for its "robustness to outliers", we found that L1 can lead to suboptimal results in certain cases, such as the Lego bulldozer:

L1, 20k iters

MSE, 20k iters

Therefore, we use both the L1 and MSE losses in NeuS training.
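A minimal pure-Python sketch of a combined photometric loss, assuming equal weights of 1.0 for both terms (`w_l1` and `w_mse` are hypothetical names and values; the config files determine the weights actually used). The gradient helpers illustrate the trade-off: the L1 gradient has the same magnitude regardless of how large the residual is, which is what makes it robust to outliers, while the MSE gradient grows with the residual and pushes harder on large errors.

```python
def l1_loss(pred, target):
    # Mean absolute error over all color values.
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def mse_loss(pred, target):
    # Mean squared error over all color values.
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def photometric_loss(pred, target, w_l1=1.0, w_mse=1.0):
    # Hypothetical weighted sum of the two terms.
    return w_l1 * l1_loss(pred, target) + w_mse * mse_loss(pred, target)


def l1_grad(pred, target):
    # d(L1)/d(pred_i): sign of the residual divided by N (constant magnitude).
    n = len(pred)
    return [((p > t) - (p < t)) / n for p, t in zip(pred, target)]


def mse_grad(pred, target):
    # d(MSE)/d(pred_i): 2 * residual / N (proportional to the error).
    n = len(pred)
    return [2.0 * (p - t) / n for p, t in zip(pred, target)]


pred = [0.5, 0.5]
target = [0.0, 1.0]
print(photometric_loss(pred, target))  # 0.5 (L1) + 0.25 (MSE) = 0.75
print(l1_grad([0.1], [0.0]), l1_grad([0.9], [0.0]))    # same magnitude
print(mse_grad([0.1], [0.0]), mse_grad([0.9], [0.0]))  # 9x larger for 9x error
```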

Floater problem

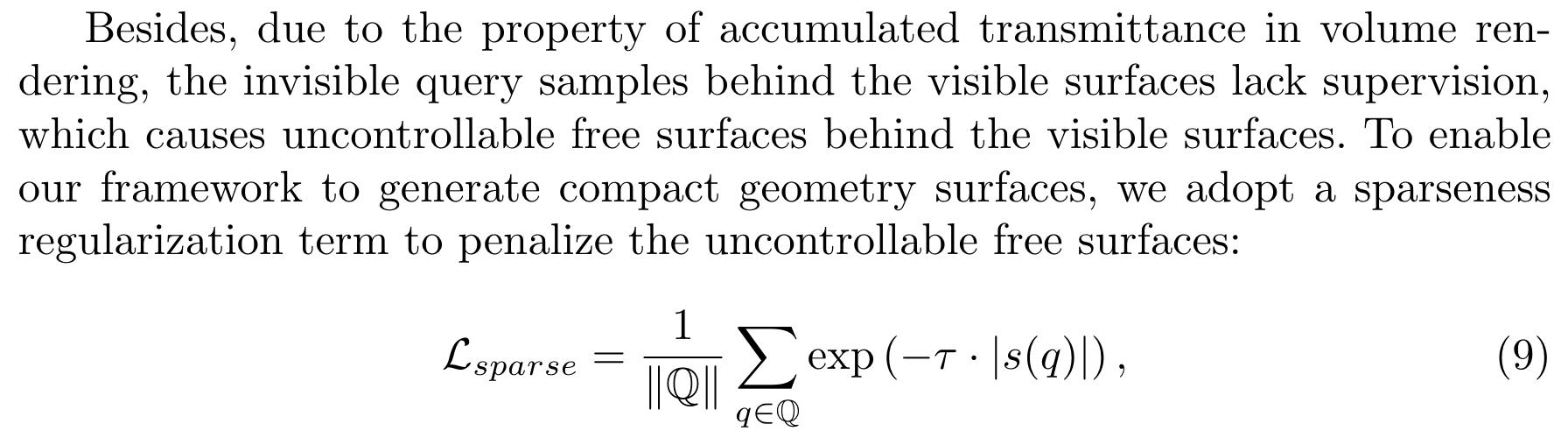

Training NeuS without a background model can lead to floaters (uncontrolled surfaces) in free space. This is because floaters that take on the background color do no harm to rendering quality, so they are never penalized when training with the photometric loss alone. We alleviate this problem with random background augmentation (masks needed) and the sparsity loss proposed in SparseNeuS (no masks needed).
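Both ideas can be sketched in pure Python. This is an illustration under assumptions, not the repository's code: `composite`, `pixel_loss`, and `sparsity_loss` are hypothetical helpers, a per-pixel MSE stands in for the photometric loss, and `scale=100.0` is an arbitrary placeholder. With a fixed white background, a white floater over a masked-out pixel costs nothing; once the background color is drawn at random each step, the same floater no longer matches the target and is penalized. The sparsity term needs no mask: it is evaluated on SDF values at randomly sampled points and grows when those points lie near a surface.

```python
import math
import random


def composite(rgb, alpha, bg):
    # Alpha-composite a color over a background: c * alpha + bg * (1 - alpha).
    return [c * alpha + b * (1.0 - alpha) for c, b in zip(rgb, bg)]


def pixel_loss(rendered_rgb, opacity, gt_rgb, mask, bg):
    # Rendering and ground truth are composited over the SAME background color.
    pred = composite(rendered_rgb, opacity, bg)
    target = composite(gt_rgb, mask, bg)
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def sparsity_loss(sdf_samples, scale=100.0):
    # SparseNeuS-style term: mean of exp(-scale * |sdf|) over points sampled in
    # the volume. It is largest where |sdf| is near 0 (a surface exists), so it
    # discourages surfaces in free space. `scale` is a hypothetical value.
    return sum(math.exp(-scale * abs(s)) for s in sdf_samples) / len(sdf_samples)


# A background pixel (mask = 0) containing a white, fully opaque floater.
floater_rgb, floater_opacity = [1.0, 1.0, 1.0], 1.0
gt_rgb, mask = [0.0, 0.0, 0.0], 0.0  # gt color is irrelevant where mask = 0

white = [1.0, 1.0, 1.0]
print(pixel_loss(floater_rgb, floater_opacity, gt_rgb, mask, white))  # 0.0

rng = random.Random(0)
random_bg = [rng.random() for _ in range(3)]  # redrawn every training step
print(pixel_loss(floater_rgb, floater_opacity, gt_rgb, mask, random_bg) > 0)  # True
```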

For NeRF, we adopt the distortion loss proposed in Mip-NeRF 360 to alleviate the floater problem when training on unbounded 360 scenes.
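For reference, the Mip-NeRF 360 distortion loss for one ray with sorted interval endpoints s_0 < s_1 < ... < s_n (normalized distances along the ray) and per-interval weights w_i is the sum over all pairs i, j of w_i * w_j * |(s_i + s_{i+1})/2 - (s_j + s_{j+1})/2|, plus one third of the sum over i of w_i^2 * (s_{i+1} - s_i). The sketch below is a direct O(n^2) pure-Python transcription for a single ray; practical implementations vectorize it, and the function name is hypothetical. The loss is smallest when the weight is concentrated in one short interval, which is why it suppresses semi-transparent floaters spread along the ray.

```python
def distortion_loss(s, w):
    """Mip-NeRF 360 distortion loss for a single ray.

    s: n + 1 sorted interval endpoints (normalized ray distances).
    w: n non-negative weights, one per interval [s[i], s[i+1]).
    """
    if len(s) != len(w) + 1:
        raise ValueError("need exactly one more endpoint than weights")
    mids = [(s[i] + s[i + 1]) / 2.0 for i in range(len(w))]
    # Pairwise term: weighted distances between all interval midpoints.
    pairwise = sum(w[i] * w[j] * abs(mids[i] - mids[j])
                   for i in range(len(w)) for j in range(len(w)))
    # Self term: penalizes weight spread within each individual interval.
    self_term = sum(w[i] ** 2 * (s[i + 1] - s[i]) for i in range(len(w))) / 3.0
    return pairwise + self_term


s = [0.0, 1.0, 2.0]
print(distortion_loss(s, [1.0, 0.0]))  # weight concentrated: about 1/3
print(distortion_loss(s, [0.5, 0.5]))  # weight spread out: about 2/3
```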

Complex cases require long training

In the given config files, the number of training steps is set to `20000` by default. This works well for objects with simple geometry, like the `chair` scene in the NeRF-Synthetic dataset. However, for more complicated cases with many thin structures, more training iterations are needed to get high-quality results. This can be done simply by setting `trainer.max_steps` to a higher value, like `50000` or `100000`.
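`trainer.max_steps` is a dotted path into a nested config. As a hypothetical illustration of what such an override does (the repository's own config loader handles this, and `set_dotted` is not part of it), here is a pure-Python helper that applies a dotted-key override to a nested dict:

```python
def set_dotted(cfg, dotted_key, value):
    """Set cfg["a"]["b"]... = value for a key like "a.b" (hypothetical helper)."""
    *parents, leaf = dotted_key.split(".")
    node = cfg
    for key in parents:
        node = node.setdefault(key, {})  # create missing sections on the fly
    node[leaf] = value
    return cfg


# Hypothetical config mirroring the default described above.
config = {"trainer": {"max_steps": 20000}}
set_dotted(config, "trainer.max_steps", 100000)  # for scenes with thin structures
print(config["trainer"]["max_steps"])  # 100000
```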

Cases where training does not converge

A simple way to tell whether training is converging is to check the value of `inv_s` (shown in the progress bar by default). If `inv_s` increases steadily (often ending up above 1000), training is on track. If training diverges, `inv_s` typically drops below its initial value and gets stuck there. Many things can cause divergence. To alleviate divergence caused by unstable optimization, we adopt a learning rate warm-up strategy following the original NeuS in the latest commits.
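Two small, hypothetical pure-Python sketches of the ideas above. `inv_s_status` turns the rule of thumb into a check on a logged `inv_s` history (the 80% threshold and the example initial value are arbitrary). `neus_lr_factor` is a warm-up schedule modeled on the original NeuS training code: a linear ramp from 0 to 1 over `warm_up_end` steps, then cosine decay down to a floor `alpha`. The step counts and `alpha` below are placeholders, and the schedule in this repository may differ.

```python
import math


def inv_s_status(history, init_value):
    """Rough heuristic on logged inv_s values (thresholds are hypothetical)."""
    if len(history) < 2:
        return "unclear"
    if history[-1] < init_value:
        return "diverging"  # dropped below its initial value
    ups = sum(1 for a, b in zip(history, history[1:]) if b > a)
    if ups >= 0.8 * (len(history) - 1):
        return "converging"  # increased at most logged checkpoints
    return "unclear"


def neus_lr_factor(step, warm_up_end, end_step, alpha):
    """Multiplier on the base learning rate: linear warm-up, then cosine decay."""
    if step < warm_up_end:
        return step / warm_up_end
    progress = (step - warm_up_end) / (end_step - warm_up_end)
    return (math.cos(math.pi * progress) + 1.0) * 0.5 * (1.0 - alpha) + alpha


print(inv_s_status([20.0, 50.0, 120.0, 400.0, 1200.0], init_value=20.0))  # converging
print(inv_s_status([20.0, 12.0, 8.0, 8.0, 8.0], init_value=20.0))  # diverging

# Hypothetical schedule: 500 warm-up steps out of 20000 total, alpha = 0.05.
for step in (0, 250, 500, 10250, 20000):
    print(step, round(neus_lr_factor(step, 500, 20000, 0.05), 3))
```

The warm-up keeps early updates small while the SDF and `inv_s` are still far from a consistent state, which is the unstable phase where divergence tends to start.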


Repository owner locked and limited conversation to collaborators on Dec 5, 2022.
